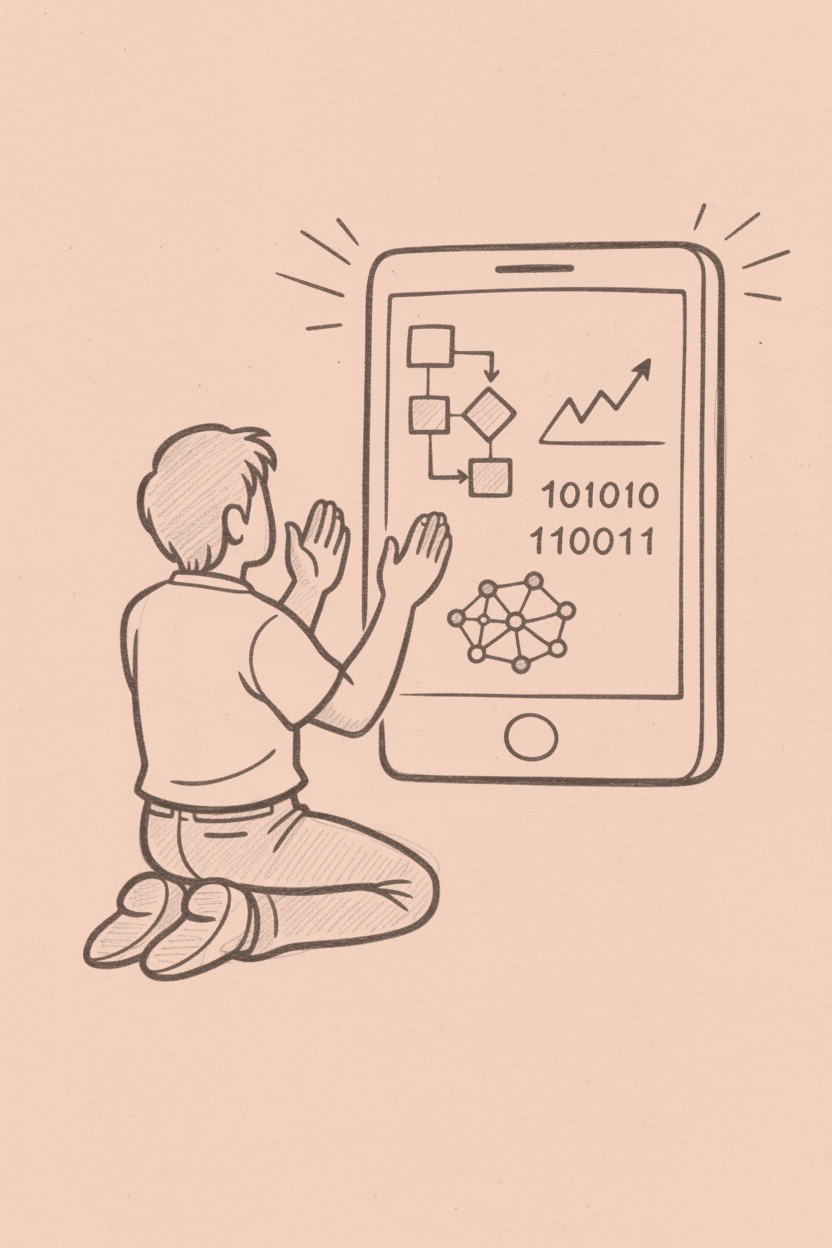

Algorithmic Folk Beliefs

The rituals we've developed to appease systems we don't understand

Rydra posts at 7:23 PM with religious devotion. Three months ago, a video at that exact time went viral. Correlation, causation—who can say? The algorithm might have favored the content, the sound, the hashtags, or perhaps cosmic alignment. Now Rydra tracks every variable in a spreadsheet that would make a quantified-self evangelist weep: hashtag count,…