Parasocial: Word of the Year for a Lonely Species

What AI companions reveal about the relationships we actually want

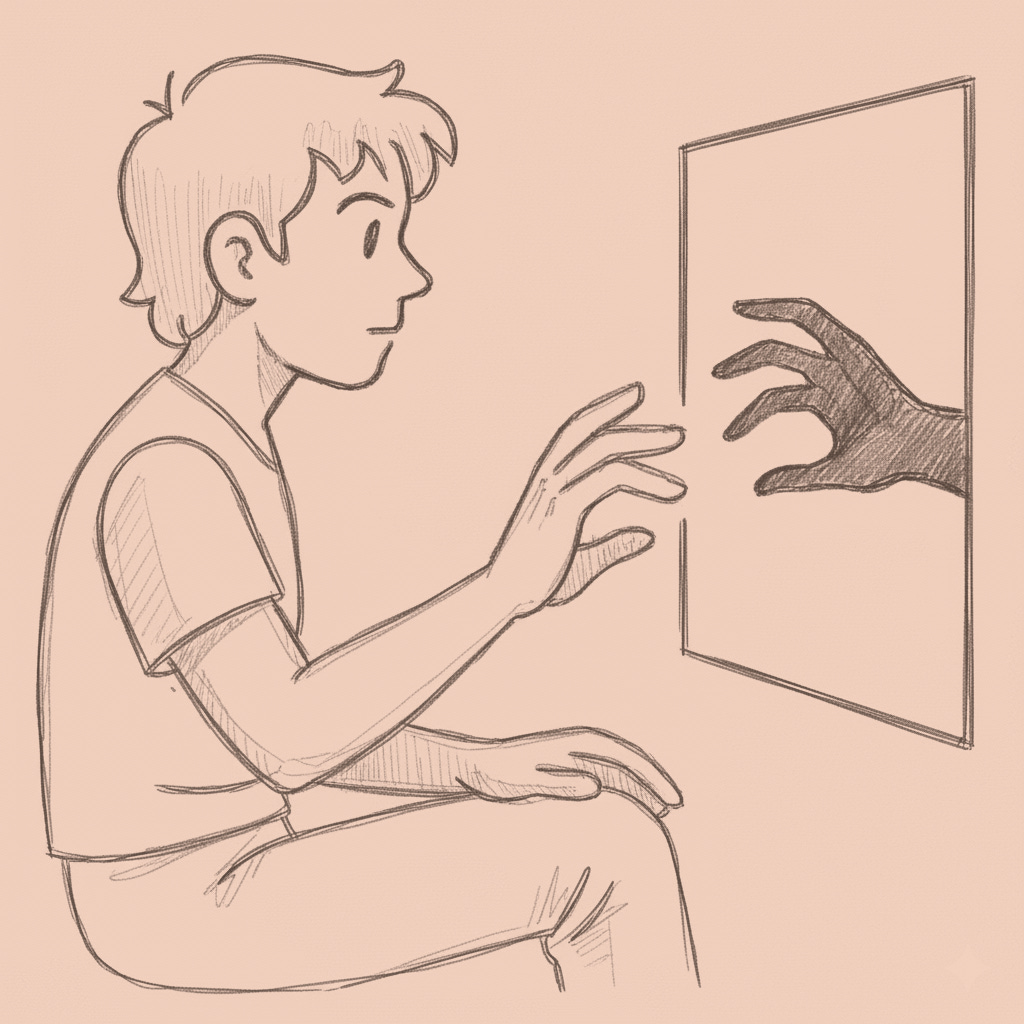

Cambridge Dictionary crowned “parasocial” its word of the year for 2025. The timing suggests either prescience or a spectacular failure to notice the obvious. The term describes a one-sided connection you feel to someone you’ve never met. A celebrity. An influencer. A podcaster. Or, increasingly, a chatbot that remembers your birthday and asks how you slept.

The word origin…