The Safety Paradox: How AI's Defenders and Critics Both Keep It Unregulated

When everyone agrees something is dangerous, disagreeing about which danger turns out to be very convenient

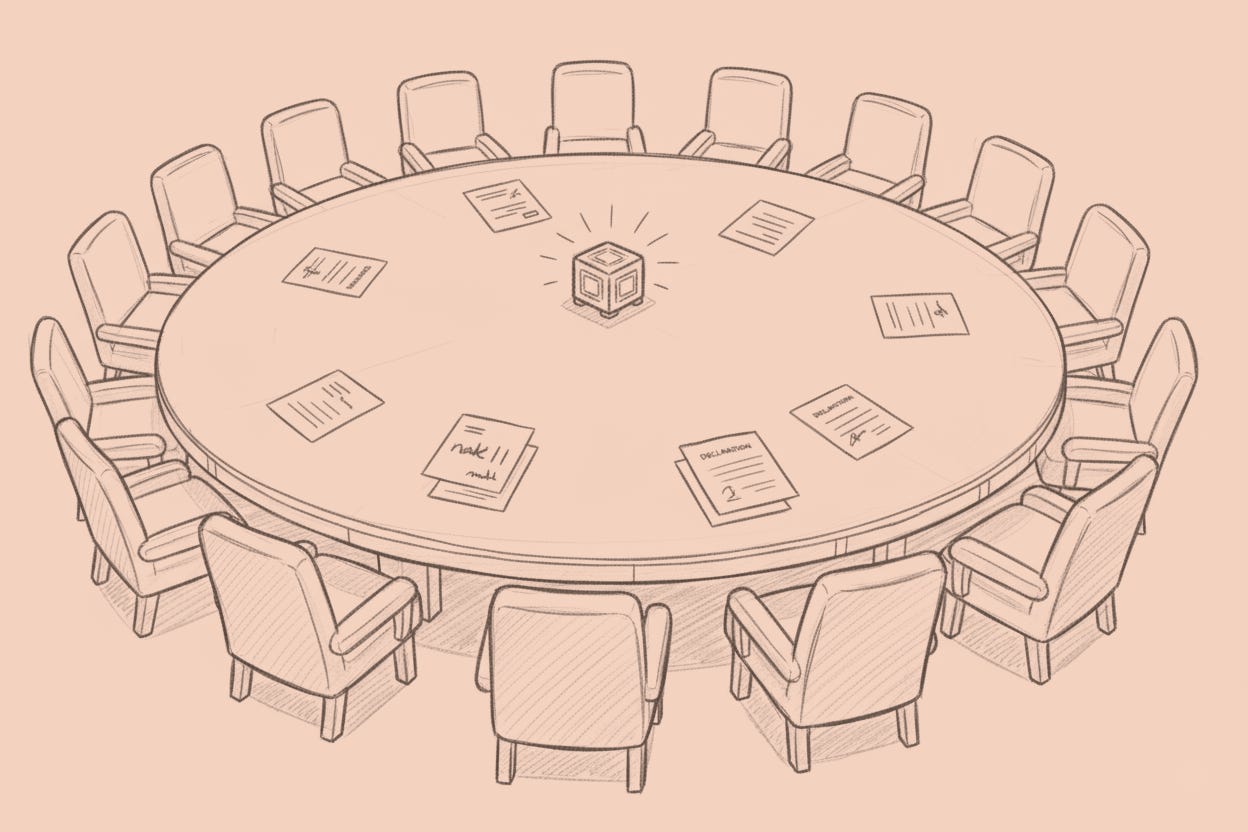

When we face threats we can’t quite name, we schedule meetings about them. This isn’t cynicism. It’s anthropology in real time. Watch what we do when confronted with technology that might reshape power, eliminate jobs, amplify discrimination, or (the popular framing) extinguish humanity. We gather representatives from twenty-eight countries at his…