The Democratization Theater: What Open Source AI Reveals About Who Gets to Play

When tech giants give away the tools but keep the keys, calling it "open" becomes its own kind of power move.

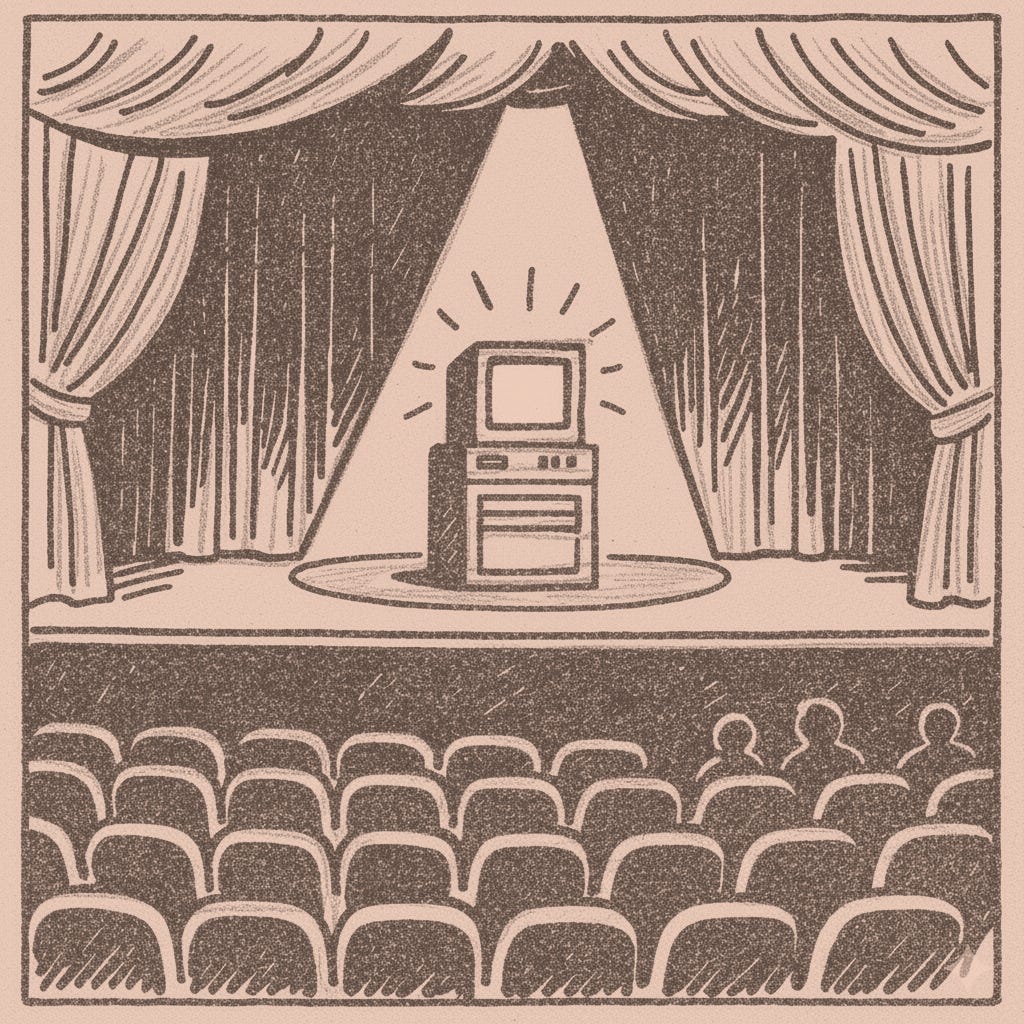

47,000 stars on GitHub. Compute costs that exceed your annual salary. Both numbers keep climbing, and they pull in opposite directions. This is democratization theater, and we’re all buying tickets.

Google released TensorFlow as open source in November 2015. The press releases emphasized accessibility and collaboration. The infrastructure required to train models at Google’s sc…