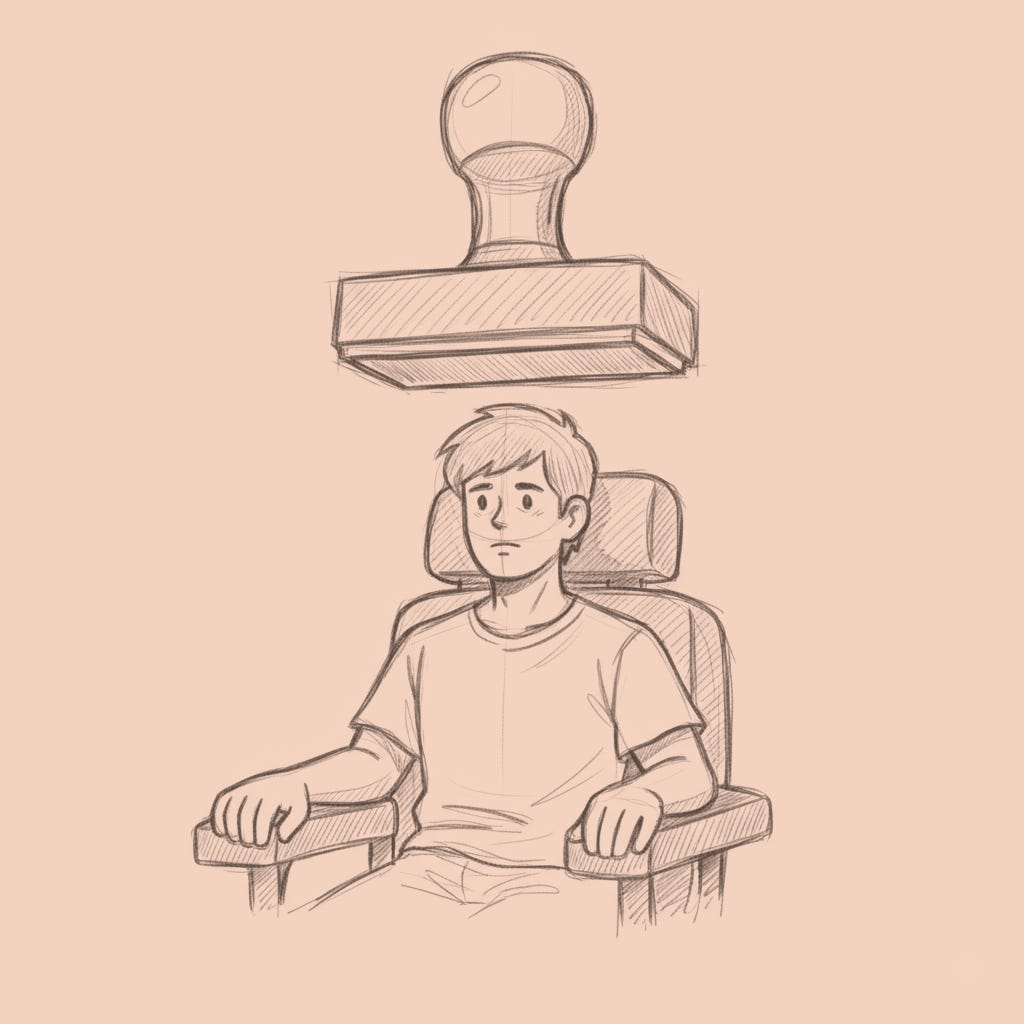

The Voight-Kampff Moment

When proving you're human becomes your problem, not theirs

In Philip K. Dick’s Do Androids Dream of Electric Sheep?, the Voight-Kampff test measured capillary dilation and the blush response while administrators asked about dying wasps. It assumed androids couldn’t simulate the involuntary markers of empathy. The burden of proof sat with the examiner. The android just had to exist.

That architecture is inverting. …