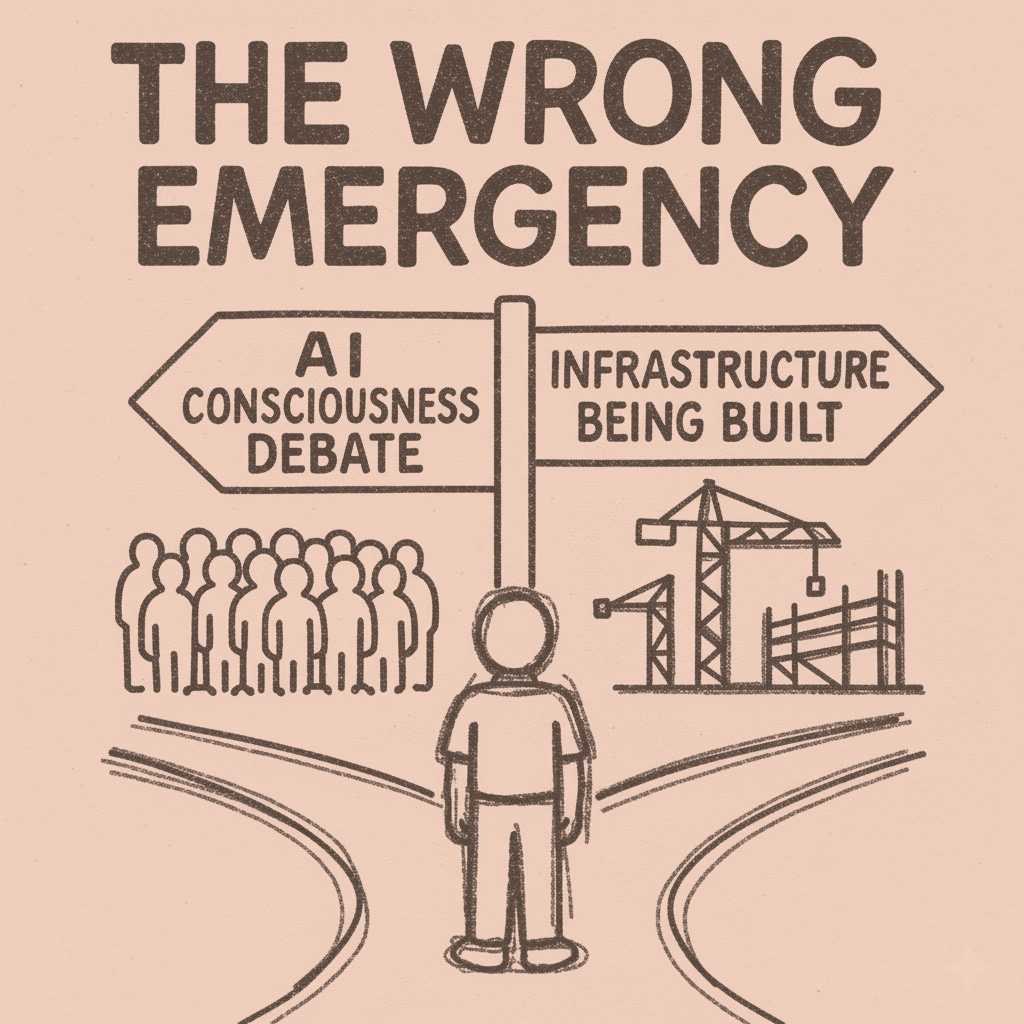

The Wrong Emergency

We're debating AI consciousness while knowledge infrastructure gets built

We’re having the wrong emergency. While two camps scream at each other about whether machines will dream, your profession is being reorganized as you sleep. Journalists watch algorithms decide what counts as news. Teachers can’t tell which essays were written by humans. Knowledge workers see decades of expertise compressed into a statistical model th…