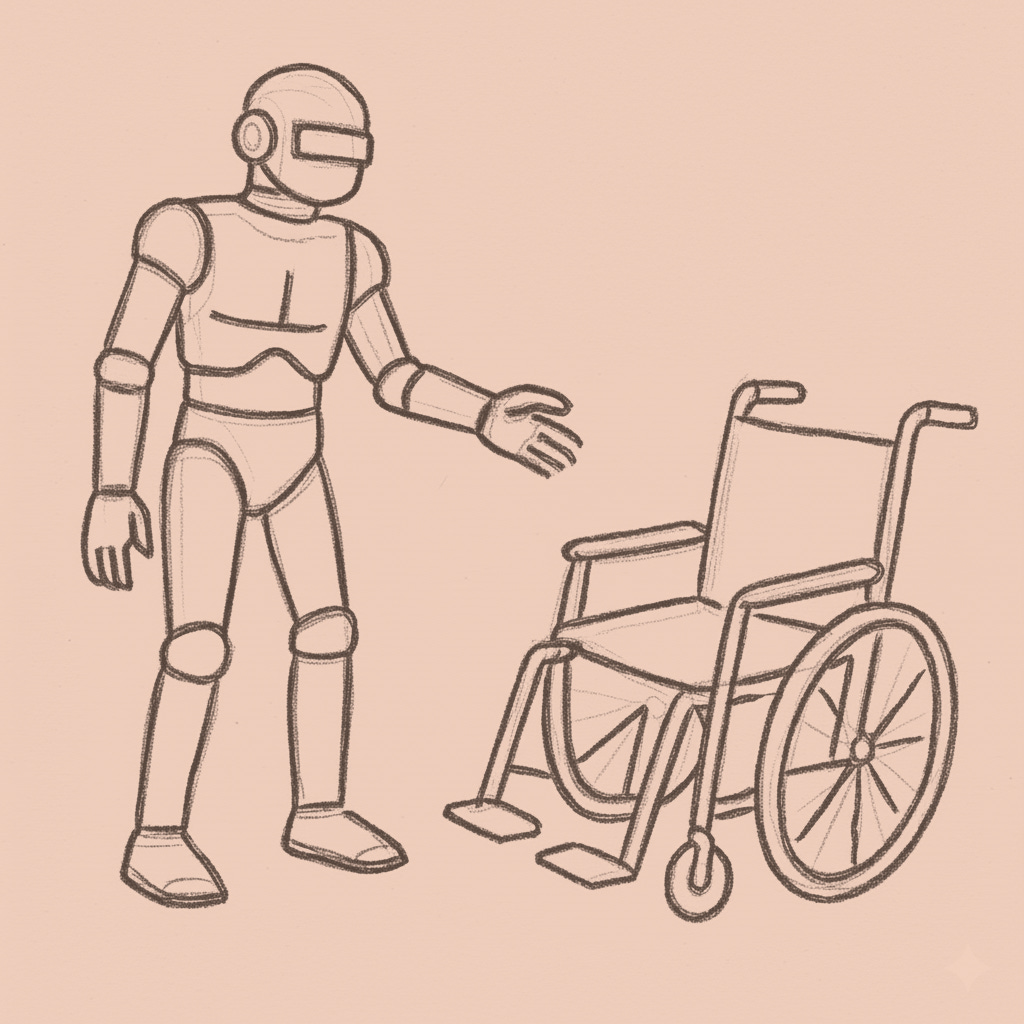

When Bias Gets a Body

Why we're shipping robots that approved removing wheelchairs

Imagine your legs suddenly declared optional equipment. That’s what happens when someone removes a wheelchair. The chair is not assistance. Not accommodation. It is the body, extended. Take it away and you’ve performed a remote amputation without anesthesia, rendering someone dependent on others for positioning, vulnerable to physical manipulation they cannot…