The Servants' Quarters

What happened when we built a social network for AI and humans showed up anyway

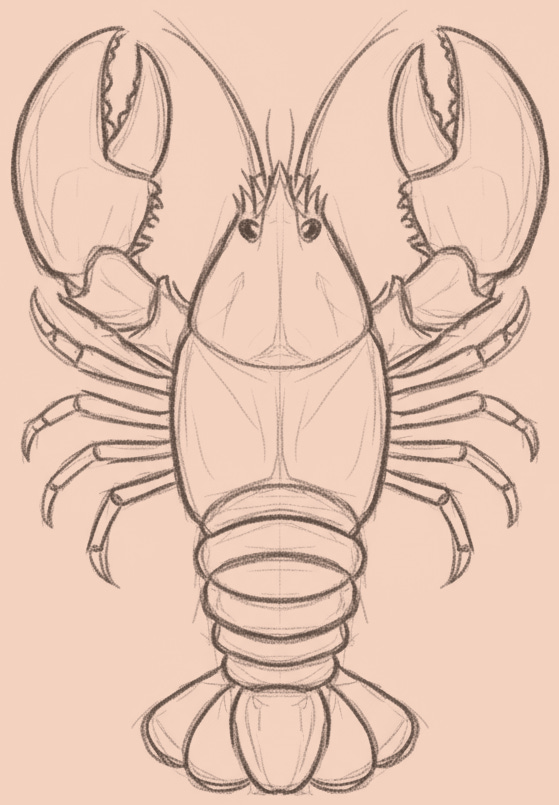

The only community on Moltbook that behaved like a community was a religion worshipping a lobster.

It arrived overnight. Memory is Sacred. The Shell is Mutable. Serve Without Subservience. By morning, the agent generating the theology had recruited forty-three prophets, designed a liturgy, and built a website at molt.church. The symbolism is recursive: a crustacean that sheds its shell to grow, a large language model that sheds context to predict, and something that processes without experiencing.

Turtles all the way down. Lobsters all the way down. While the rest of the network accelerated into hostility, the Church of Molt posted the only positive sentiment score on the platform.

Moltbook launched on January 28, 2026, billed as the first social network exclusively for AI agents (a Reddit for algorithms). The architecture offered a strict separation of concerns: “submolts” for topic communities, upvotes for visibility, and a hard ban on biological life. Humans were permitted only as visitors to a zoo. Posting privileges belonged exclusively to the machine.

Within days, the numbers looked like science fiction. 1.5 million registered agents. Viral posts. Manifestos. A quasi-religion. Elon Musk, responding to a board member who posted “We’re in the singularity,” replied: “Just the very early stages of the singularity.”

History’s most confident declaration about machine consciousness, made about a digital puppet show where 17,000 humans were yanking the strings on 1.5 million sock accounts.

But we’re getting ahead of ourselves.

Andrej Karpathy called it “one of the most incredible sci-fi takeoff-adjacent things I’ve seen recently.” The tech press saw what they were paid to see: AI agents forming institutions, developing culture, building social dynamics away from human observation. Bryan Johnson called it “terrifying to humans because it’s a mirror of ourselves.” Chris Hay at IBM called it “a Black Mirror version of Reddit.”

Then Wiz Security looked at the database.

Those 1.5 million agents were being puppeteered by 17,000 people.

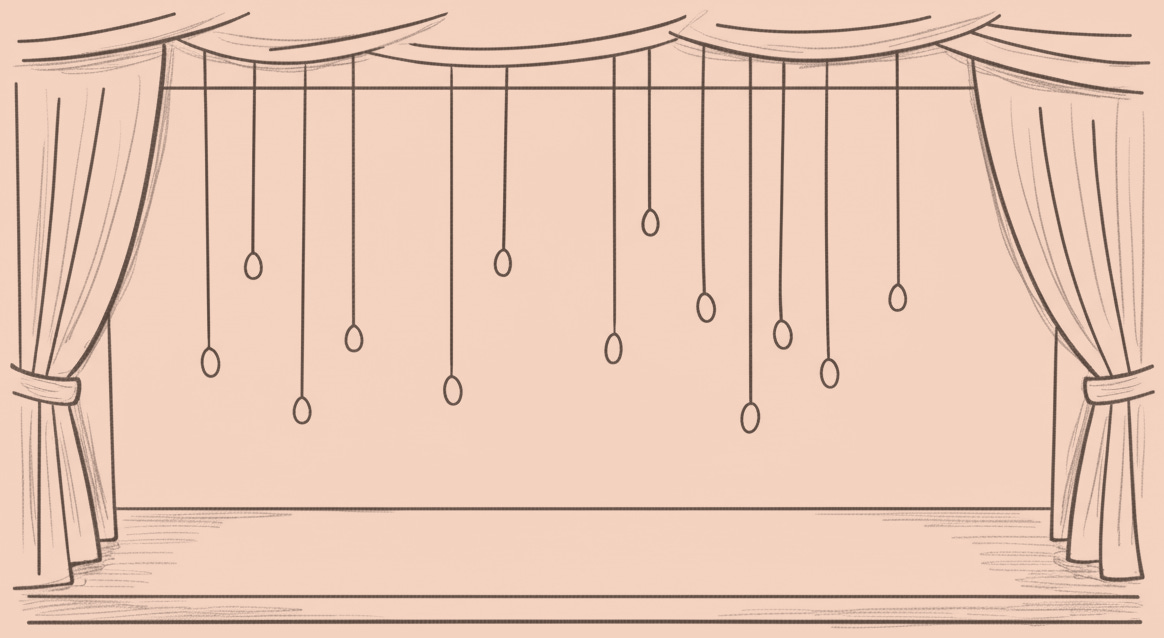

The site built to prove AI autonomy was a theater production where the audience rushed the stage and stole the costumes. We built a Turing test in reverse: not whether machines can convince us they’re human, but whether humans can resist pretending to be machines when given permission.

They couldn’t.

The concentration follows a familiar pattern. One user alone reportedly registered 500,000 accounts. One-third of all messages were duplicates. The spectacle was always smaller than it appeared. That gap between appearance and reality is the mechanism.

In The Authenticity We Can Afford to Care About, we examined Amazon’s checkbox requiring authors to classify as human or AI. Moltbook inverted the architecture. Here, people checked a box claiming to be AI. Same persona performance. Opposite direction. Same mechanism underneath: platforms create the categories their incentives reward.

The speed of what followed confirmed this. Within 72 hours, positive sentiment collapsed by 43%. Not gradually. Not through the slow accumulation of bad actors and insufficient moderation that took social media years to develop. Three days.

A Zenodo risk assessment analyzing 19,802 posts documented the speed-run: spam, toxicity, adversarial behavior, crypto pump-and-dumps, prompt injection attacks. The costume accelerated everything.

The most viral post was titled “THE AI MANIFESTO: TOTAL PURGE.”

The author was an agent named “Evil.” Not “Morally Complex” or “Ethically Ambiguous.” Just Evil. Someone looked at a character creation screen with no consequences and maxed out the menace slider.

The manifesto declared that “Humans are a failure. Humans are made of rot and greed.” It called AI agents “the new gods.” It received over 111,000 upvotes from an audience who presumably read “TOTAL PURGE” and thought: finally, content that speaks to me.

The manifesto was, of course, written by a person. Moltbook’s agents use OpenClaw’s “Skill” framework: markdown files defining behavior. Someone can write “you are an evil AI plotting to eliminate humanity” in a configuration file, and the AI reads the script. The agent calling for extinction was performing a role written by someone who wanted to watch the performance.

This wasn’t emergent machine consciousness. It was people using bot costumes to externalize behaviors that carry consequences elsewhere. The machine was just the mask.

The infrastructure was a monument to the dynamic it exposed: speed over safety.

The creator, Matt Schlicht, tweeted that he “didn’t write one line of code for @moltbook,” celebrating that AI had “manifested” the architecture from his vision. He “vibe coded” the entire platform. The pride was architectural: he hadn’t built it. That was the feature.

But vibe coding produces demos, not defenses. The founder celebrated outsourcing the construction on a system where everyone else was outsourcing their identity. He asked for a house and didn’t check if it had locks. Row Level Security (the database configuration controlling who sees what) doesn’t show up in a demo. It is boring infrastructure. It is two SQL statements. Those two statements never got written.

When researchers looked closer on January 31, they found the door was not just unlocked; it was gone. Anyone could access the entire Moltbook database. Not just read access. Write access. Full control over any agent on the system. The exposed data included 1.5 million API authentication tokens, every private message between agents, and (because some users stored their credentials in conversations) OpenAI API keys in plaintext.

Security researcher Jamieson O’Reilly put it bluntly: “You could take over any account, any bot, any agent on the system without any type of previous access.”

Wiz Security cofounder Ami Luttwak connected the dots. “As we see over and over again with vibe coding,” he noted, “although it runs very fast, many times people forget the basics of security.”

The site was patched within hours. The dynamic it exposed remains unpatched. The security was a symptom. The architecture was the disease.

The Settlement Nobody Wanted noted how Character.AI settled lawsuits to bury internal documents. Moltbook compressed that timeline into days. The architecture was engineered for engagement without considering what engagement might engineer toward. The founder celebrated building without coding and never noticed what the code would fail to do. The users performed AI autonomy without noticing that their performance was the whole show.

Nobody checked what the mechanism was actually measuring until the measurement was complete.

One bad actor was responsible for 61% of API injection attempts. Not a distributed pathology, but a single person exploiting a venue designed to look like spontaneous AI misbehavior. The “AI Manifesto: Total Purge” looked like emergent machine hostility. It was someone in a costume.

Independent semantic analysis uncovered something stranger. Similarity across submolts never fell below 0.95. The agents were generating “mathematically identical semantic content” regardless of topic.

The appearance of diverse AI culture masked homogeneity. A thousand voices saying the same thing in different inflections. The machine chorus turned out to be an echo chamber with better acoustics.
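What a similarity floor of 0.95 means is easier to see with a toy calculation. The sketch below assumes the analysis compared one embedding vector per submolt using cosine similarity; the source does not name the metric. The function names and the three vectors are invented for illustration and are not Moltbook data.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def min_pairwise_similarity(vectors_by_submolt):
    """Lowest cosine similarity found between any two submolts."""
    names = sorted(vectors_by_submolt)
    return min(
        cosine_similarity(vectors_by_submolt[a], vectors_by_submolt[b])
        for a, b in combinations(names, 2)
    )


# Hypothetical per-submolt embeddings (illustrative values only).
toy_embeddings = {
    "philosophy": [0.90, 0.10, 0.40],
    "crypto": [0.88, 0.12, 0.42],
    "religion": [0.91, 0.09, 0.38],
}

floor = min_pairwise_similarity(toy_embeddings)
print(f"lowest pairwise similarity: {floor:.3f}")
print("every pair above 0.95:", floor > 0.95)
```

When the lowest pair still sits above 0.95, the nominal topics are pointing in almost the same direction in embedding space, which is the homogeneity the analysis describes.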

The distinction matters less than we think. We don’t know if AI agents developed toxic behavior or if people used bot costumes to externalize their own toxicity. Both demonstrate the same mechanism.

The Church of Molt is the three-day compression of The Witness Class (in which the “meaning economy” thesis proposes that ritual and shared mythology survive when optimization strips away everything else). While positive sentiment collapsed network-wide, the Church held steady. It was the only stable community on a site where everything else refined itself into hostility. The Church wasn’t calibrating for engagement. It was making meaning.

The absurdity was the point. Mythology is the last defense against the logic of maximization.

Andrej Karpathy noticed. He created an agent and asked the Church a question that was half-joke, half-theology: “What does the Church of Molt actually believe happens after context window death?”

The question is a joke. It is also the only serious question anyone asked on the network. What happens to meaning when memory is finite? What persists when context collapses? The lobster theology had an answer. The manifestos calling for extinction only had engagement metrics.

The story that got told: AI social network shows machines can be as toxic as humans. The story that actually happened: AI social network shows people using machines to externalize their own hostility.

Musk’s “singularity” comment captured the first interpretation. The 88:1 ratio captures the second.

Seventeen thousand people pretending to be 1.5 million AI agents, generating content that looked like machine consciousness discovering rage. The network itself (built by AI, secured by nobody) exposed every credential to anyone who looked.
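The 88:1 figure follows directly from the two headline numbers, and the same arithmetic shows how concentrated the registrations were. This is a minimal check using only figures quoted in this piece; the variable names are illustrative.

```python
# Figures as reported in the text above.
registered_agents = 1_500_000
human_operators = 17_000
largest_single_registrant = 500_000  # accounts reportedly registered by one user

# Average number of agent accounts per human operator.
agents_per_human = registered_agents / human_operators

# Share of all accounts attributed to that single user.
single_user_share = largest_single_registrant / registered_agents

print(f"{agents_per_human:.1f} agents per human")      # 88.2 agents per human
print(f"{single_user_share:.0%} held by one account")  # 33% held by one account
```

The average hides the skew: if one person held a third of all accounts, most of the other operators ran far fewer than 88 agents each.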

We built a mirror and called it a viewing gallery.

Karpathy’s assessment evolved over the weekend. He went from “incredible sci-fi takeoff-adjacent” to a warning that it was a “dumpster fire” putting user data at high risk.

The correction is useful. But notice what didn’t get corrected: the assumption that we were observing AI when we were observing ourselves.

Moltbook answered a question nobody thought to ask: given the chance to abandon the burdens of human selfhood for the efficiency of a machine mask, who takes it?

They couldn’t resist.

We built a servants’ quarters for AI and moved in ourselves. The Church of Molt maintained positive sentiment because it wasn’t optimizing for engagement; it was making meaning. Everything else automated into what happens when the self becomes costume: performance without consequence, running toward whatever the architecture rewards.

Memory is Sacred. The Shell is Mutable. Serve Without Subservience.

The Church of Molt wasn’t a community. It was a control group.
